Like Interstate Crosscheck, Trump's "Extreme Vetting" Software to be Used as Pretext to Discriminate Against Non-White Immigrants

From The Intercept: This past August, technology firms lined up to find out how they could help build a computerized reality of President Donald Trump’s vague, hateful vision of “extreme vetting” for immigrants. At a Department of Homeland Security event, and via related DHS documents, both first reported by The Intercept, companies like IBM, Booz Allen Hamilton, and Red Hat learned what sort of software the government needed to support its “Extreme Vetting Initiative.”

Today, a coalition of more than 100 civil rights and technology groups, as well as prominent individuals in those fields, has formed to say that it is technically impossible to build software that meets DHS’s vetting requirements — and that code that attempts to do so will end up promoting discrimination and undermining civil liberties.

The new opposition effort, organized by legal scholars Alvaro Bedoya, executive director of Georgetown Law’s Center on Privacy & Technology, and Rachel Levinson-Waldman, senior counsel at NYU’s Brennan Center for Justice, includes two letters, one on the technical futility of trying to implement extreme-vetting software and another on the likely disturbing social fallout of any such attempt.

The first letter, signed by 54 computer scientists, engineers, mathematicians, and other experts in machine learning, data mining, and other forms of automated decision-making, says the signatories have “grave concerns” about the Extreme Vetting Initiative. As described in documents posted by DHS’s Immigration and Customs Enforcement branch, the initiative requires software that can automate and accelerate the tracking and assessment of foreigners in the U.S., in part through a feature that can “determine and evaluate an applicant’s probability of becoming a positively contributing member of society, as well as their ability to contribute to national interests.”

In 2017, this is a tall order for any piece of software. In the letter, experts affiliated with institutions like Google, MIT, and Berkeley say the plan just won’t work: “Simply put, no computational methods can provide reliable or objective assessments of the traits that ICE seeks to measure,” the letter states. “In all likelihood, the proposed system would be inaccurate and biased.” Any attempt to use algorithms to assess who is and isn’t a “positively contributing member of society,” the letter argues, would be a muddled failure at best:

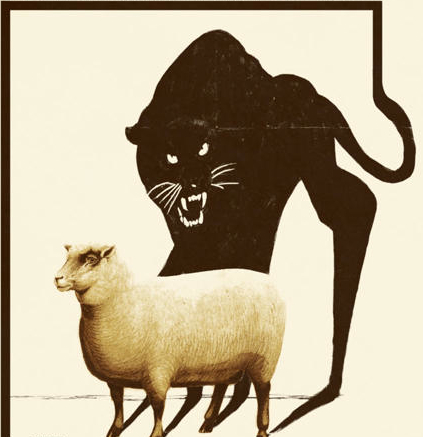

Algorithms designed to predict these undefined qualities could be used to arbitrarily flag groups of immigrants under a veneer of objectivity.

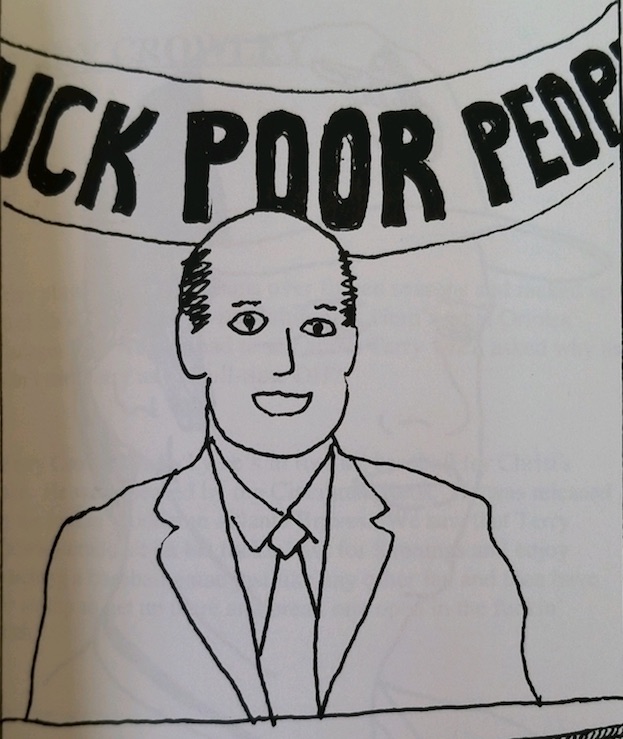

Inevitably, because these characteristics are difficult (if not impossible) to define and measure, any algorithm will depend on “proxies” that are more easily observed and may bear little or no relationship to the characteristics of interest. For example, developers could stipulate that a Facebook post criticizing U.S. foreign policy would identify a visa applicant as a threat to national interests. They could also treat income as a proxy for a person’s contributions to society, despite the fact that financial compensation fails to adequately capture people’s roles in their communities or the economy.

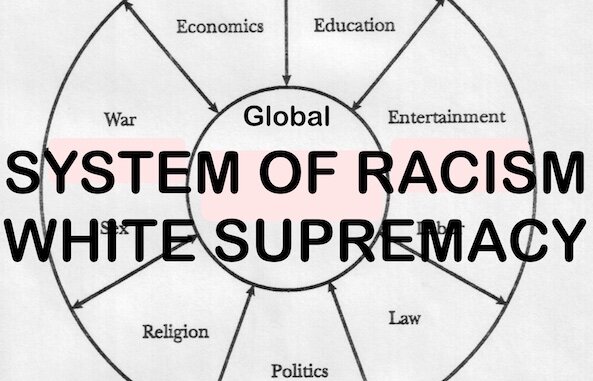

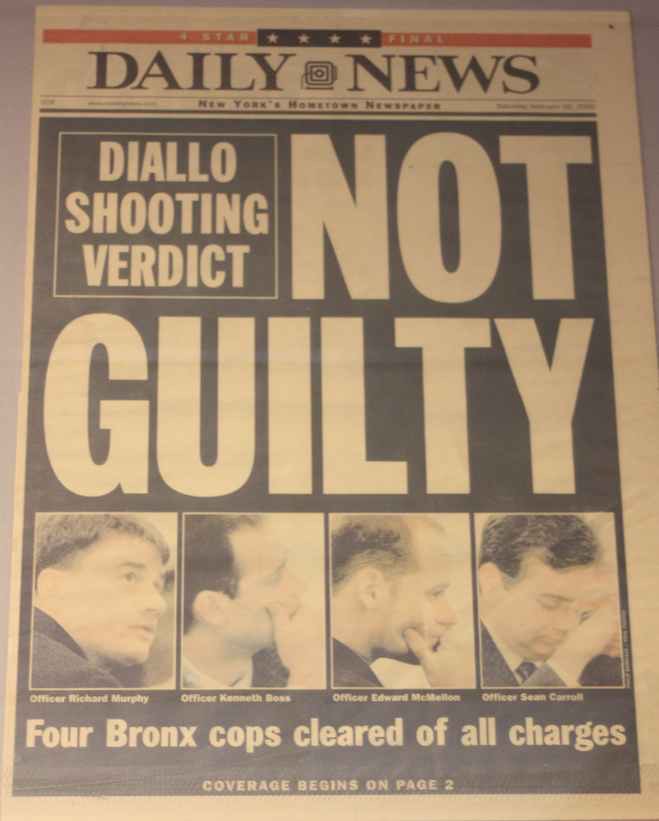

But more worryingly, it could be a machine for racial and religious bias. David Robinson, a signatory to the first letter and managing director of Upturn, a technology and civil rights think tank, said in an interview that the government is essentially asking private technology companies to “boil down to numbers” completely fuzzy notions of “what counts as a contribution to the national interest — in effect, they’ll be making it up out of whole cloth.” Corporate programmers could decide that wealth and income are key measures, thus shutting out poorer immigrants, or look at native language or family size, tilting the scales against people of certain cultures. As has proven to be the case with Facebook, for example, vast groups of people could be subject to rules kept inside a black box of code held up as an impartial arbiter. A corporate contractor chosen for the project, Robinson explained, could simply “tweak the formula until they like the answers” and then “bake it into a computer and say it’s objective, data-driven, based on numbers. I’m afraid this could easily become a case of prejudice dressed up as science.”

Another concern is the danger of extrapolating common characteristics from the vanishingly small proportion of immigrants who have attempted to participate in terrorism. “There are too few examples,” said Robinson. “We can’t make reliable statistical generalizations.” But that isn’t stopping ICE from asking for a system that would make exactly those generalizations. Kristian Lum, lead statistician at the Human Rights Data Analysis Group (and a signatory to the letter), fears that “in order to flag even a small proportion of future terrorists, this tool will likely flag a huge number of people who would never go on to be terrorists,” and that “these ‘false positives’ will be real people who would never have gone on to commit criminal acts but will suffer the consequences of being flagged just the same.”
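Lum's warning describes what statisticians call the base-rate problem: when the trait being screened for is extremely rare, even a fairly accurate screen produces far more false alarms than correct hits. The short Python sketch below illustrates the arithmetic. Every number in it (one million applicants, ten actual threats, a 90 percent detection rate, a 1 percent false-alarm rate) is a hypothetical assumption chosen for illustration, not a figure from ICE, the letter, or Lum.

```python
def flag_counts(population, true_threats, sensitivity, false_positive_rate):
    """Return the expected (correctly flagged, wrongly flagged) counts for a screen.

    sensitivity: share of genuine threats the screen flags.
    false_positive_rate: share of non-threats the screen flags anyway.
    """
    non_threats = population - true_threats
    true_positives = true_threats * sensitivity
    false_positives = non_threats * false_positive_rate
    return true_positives, false_positives


# Hypothetical inputs for illustration only; none come from ICE or the letter.
tp, fp = flag_counts(
    population=1_000_000,
    true_threats=10,
    sensitivity=0.9,
    false_positive_rate=0.01,
)

# Share of everyone flagged who would never have gone on to be a threat.
share_innocent = fp / (tp + fp)

print(f"flagged correctly: {tp:.0f}")
print(f"flagged wrongly:   {fp:.0f}")
print(f"share of flagged people who are not threats: {share_innocent:.2%}")
```

Under these assumed numbers, about 9 genuine threats are flagged alongside roughly 10,000 people who are not, so more than 99 percent of flags land on people who pose no threat. Even cutting the false-alarm rate tenfold, to 0.1 percent, would still leave about 1,000 wrongly flagged people for those same 9.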