NYU Researchers Warn Against Police Use of Facial "Affect" Recognition Technology Designed to Predict Criminality Based on Physical Characteristics - Prone to Error & Not Based on Any Science

From The Intercept: FACIAL RECOGNITION has quickly shifted from techno-novelty to fact of life for many, with millions around the world at least willing to put up with having their faces scanned by software at the airport, on their iPhones, or in Facebook’s server farms. But researchers at New York University’s AI Now Institute have issued a strong warning against not only ubiquitous facial recognition, but its more sinister cousin: so-called affect recognition, technology that claims it can find hidden meaning in the shape of your nose, the contours of your mouth, and the way you smile. If that sounds like something dredged up from the 19th century, that’s because it sort of is.

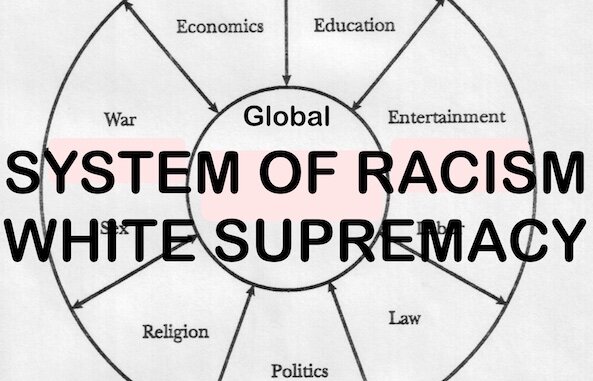

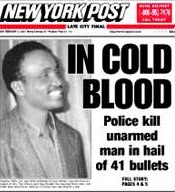

AI Now’s 2018 report is a 56-page record of how “artificial intelligence” — an umbrella term that includes a myriad of both scientific attempts to simulate human judgment and marketing nonsense — continues to spread without oversight, regulation, or meaningful ethical scrutiny. The report covers a wide expanse of uses and abuses, including instances of racial discrimination, police surveillance, and how trade secrecy laws can hide biased code from an AI-surveilled public. But AI Now, which was established last year to grapple with the social implications of artificial intelligence, expresses in the document particular dread over affect recognition, “a subclass of facial recognition that claims to detect things such as personality, inner feelings, mental health, and ‘worker engagement’ based on images or video of faces.” The thought of your boss watching you through a camera that uses machine learning to constantly assess your mental state is bad enough, while the prospect of police using “affect recognition” to deduce your future criminality based on “micro-expressions” is exponentially worse.

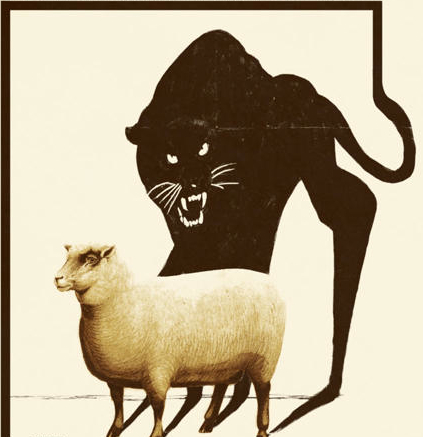

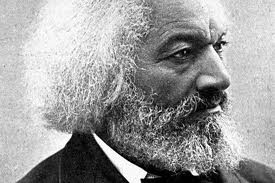

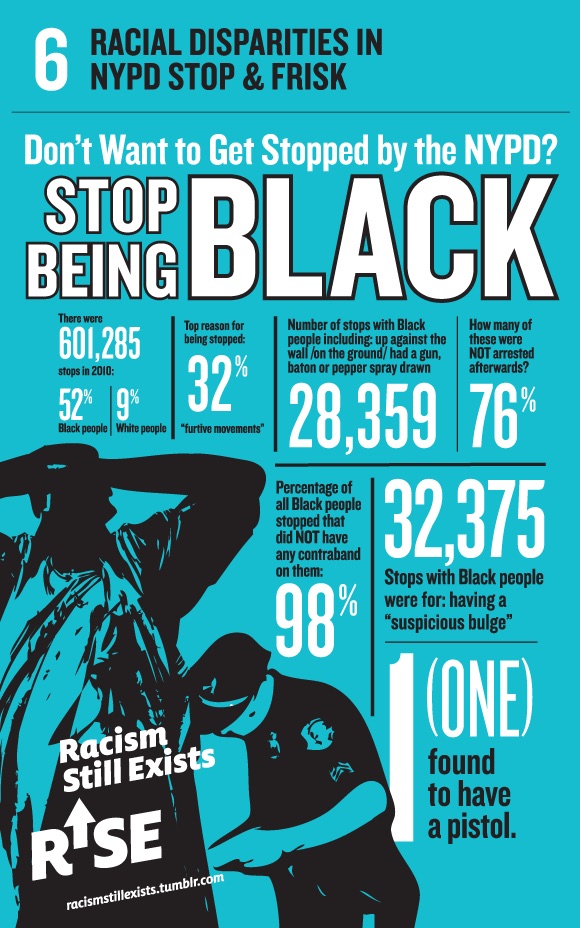

That’s because “affect recognition,” the report explains, is little more than the computerization of physiognomy, a thoroughly disgraced and debunked strain of pseudoscience from another era that claimed a person’s character could be discerned from their bodies — and their faces, in particular. There was no reason to believe this was true in the 1880s, when figures like the discredited Italian criminologist Cesare Lombroso promoted the theory, and there’s even less reason to believe it today. Still, it’s an attractive idea, despite its lack of grounding in any science, and data-centric firms have leapt at the opportunity to not only put names to faces, but to ascribe entire behavior patterns and predictions to some invisible relationship between your eyebrow and nose that can only be deciphered through the eye of a computer. Two years ago, students at a Shanghai university published a report detailing what they claimed to be a machine learning method for determining criminality based on facial features alone. The paper was widely criticized, including by AI Now’s Kate Crawford, who told The Intercept it constituted “literal phrenology … just using modern tools of supervised machine learning instead of calipers.”

Crawford and her colleagues are now more opposed than ever to the spread of this sort of culturally and scientifically regressive algorithmic prediction: “Although physiognomy fell out of favor following its association with Nazi race science, researchers are worried about a reemergence of physiognomic ideas in affect recognition applications,” the report reads. “The idea that AI systems might be able to tell us what a student, a customer, or a criminal suspect is really feeling or what type of person they intrinsically are is proving attractive to both corporations and governments, even though the scientific justifications for such claims are highly questionable, and the history of their discriminatory purposes well-documented.”

In an email to The Intercept, Crawford, AI Now’s co-founder and distinguished research professor at NYU, along with Meredith Whittaker, co-founder of AI Now and a distinguished research scientist at NYU, explained why affect recognition is more worrying today than ever, referring to two companies that use appearances to draw big conclusions about people. “From Faception claiming they can ‘detect’ if someone is a terrorist from their face to HireVue mass-recording job applicants to predict if they will be a good employee based on their facial ‘micro-expressions,’ the ability to use machine vision and massive data analysis to find correlations is leading to some very suspect claims,” said Crawford.

Faception has purported to determine from appearance if someone is “psychologically unbalanced,” anxious, or charismatic, while HireVue has ranked job applicants on the same basis.

As with any computerized system of automatic, invisible judgment and decision-making, the potential to be wrongly classified, flagged, or tagged is immense with affect recognition, particularly given its thin scientific basis: “How would a person profiled by these systems contest the result?” Crawford added. “What happens when we rely on black-boxed AI systems to judge the ‘interior life’ or worthiness of human beings? Some of these products cite deeply controversial theories that are long disputed in the psychological literature, but are being treated by AI startups as fact.”

What’s worse than bad science passing judgment on anyone within camera range is that the algorithms making these decisions are kept private by the firms that develop them, safe from rigorous scrutiny behind a veil of trade secrecy. AI Now’s Whittaker singles out corporate secrecy as confounding the already problematic practices of affect recognition: “Because most of these technologies are being developed by private companies, which operate under corporate secrecy laws, our report makes a strong recommendation for protections for ethical whistleblowers within these companies.” Such whistleblowing will continue to be crucial, wrote Whittaker, because so many data firms treat privacy and transparency as a liability, rather than a virtue: “The justifications vary, but mostly [AI developers] disclaim all responsibility and say it’s up to the customers to decide what to do with it.” Pseudoscience paired with state-of-the-art computer engineering and placed in a void of accountability. What could go wrong?