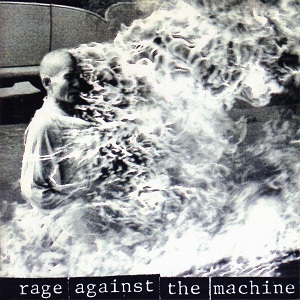

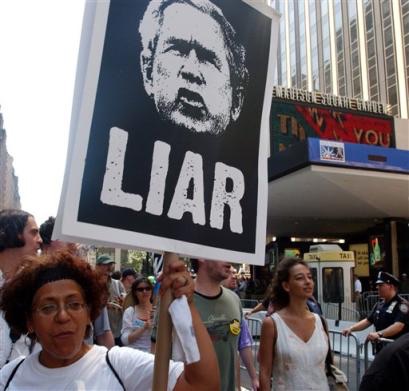

A Stanford Report Claims the US Government Used Sock Puppet Accounts to Spread Disinformation to Social Media Users in Russia, China and Iran to Manufacture Support for Ukraine Intervention

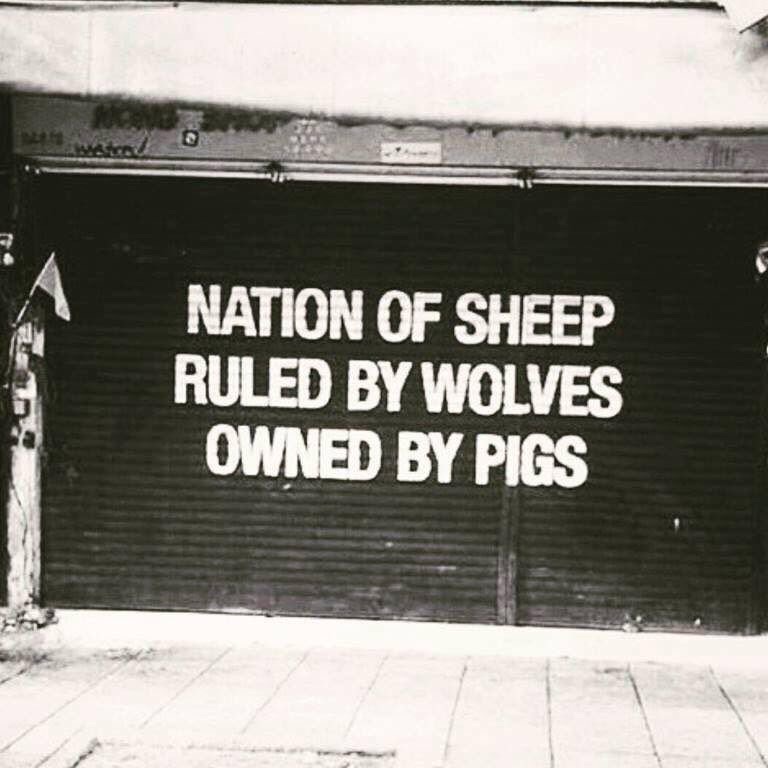

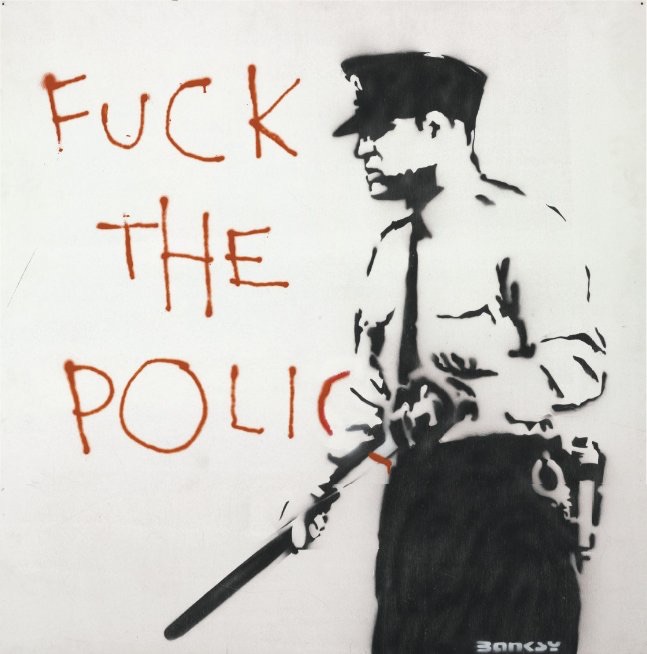

From [HERE] The moral high ground is almost impossible to hold. The United States has portrayed itself as the world’s ideal for personal freedom and government accountability, despite those holding power working tirelessly to undermine both of those ideals.

It’s not that other world governments aren’t as bad or worse. It’s that “whataboutism” isn’t an excuse for navigating the same proverbial gutters in pursuit of end goals or preferred narratives. The ends don’t justify the means. The fact that other countries violate rights more often (or more extremely) doesn’t excuse our own.

Hypocrisy and government entities are never separated by much distance. Our government has fought a War on Drugs for years, publicly proclaiming the menace created by the voluntary exchange of money for goods. Privately, it has leveraged drug sales to supply weapons to the US’s preferred revolutionaries, or simply to ensure local law enforcement agencies have access to funding that operates outside of oversight.

The same government that has frequently called out other countries for disinformation campaigns and election disruption has engaged in coups and deployed its own media (social and otherwise) weaponry to push an American narrative on foreigners.

Under President Biden, the administration (briefly) formed a “Disinformation Governance Board” under the oversight of the DHS. The intent was to prevent foreign disruption of elections and other issues of public concern. The intent may have been pure, but the reality was Orwellian. Fortunately, it was quickly abandoned.

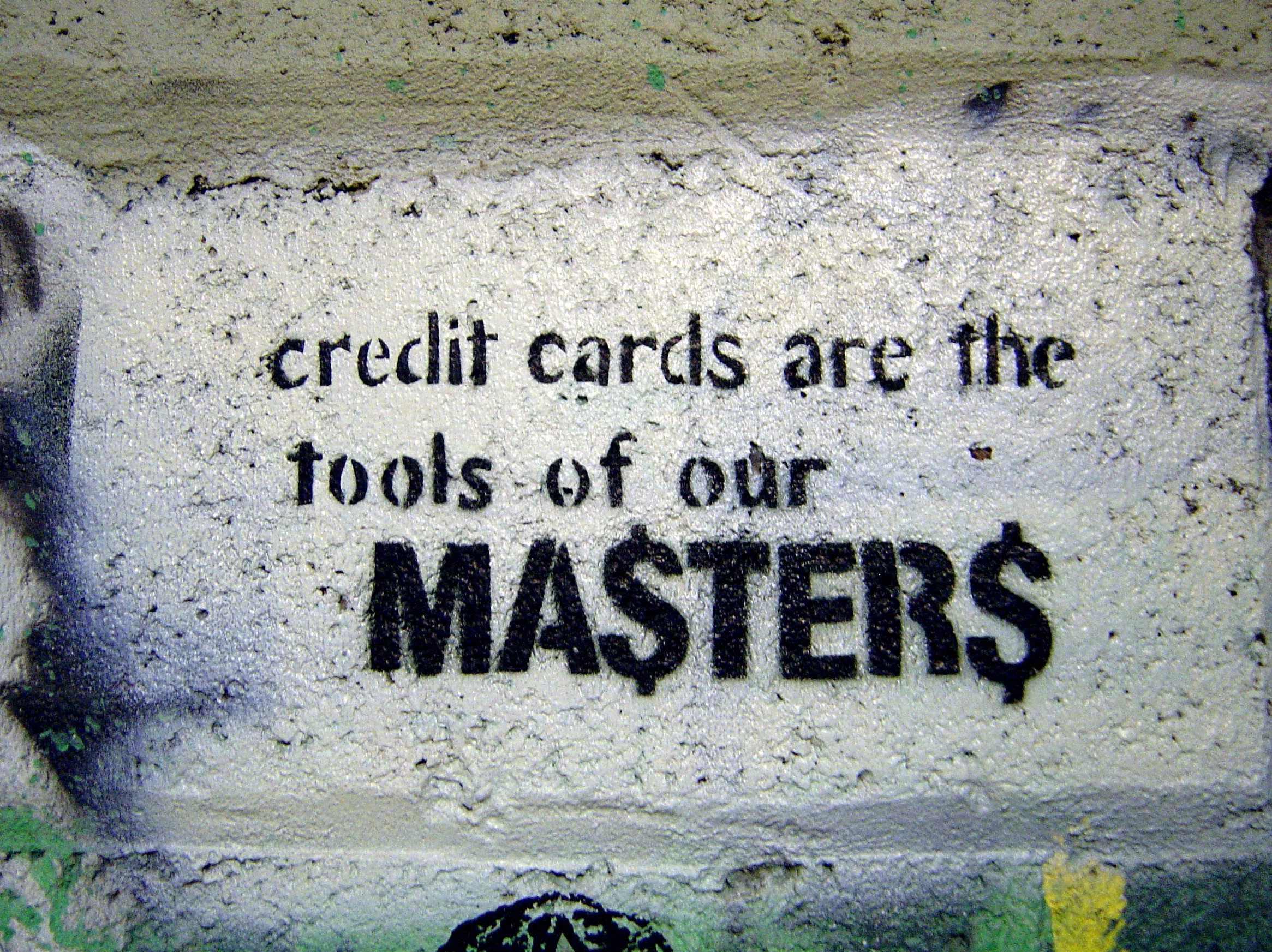

But while the federal government sought ways to respond to the disinformation spread by foreign, often state-sponsored, entities, it was apparently doing the same thing itself, as Lucas Ropek reports for Gizmodo.

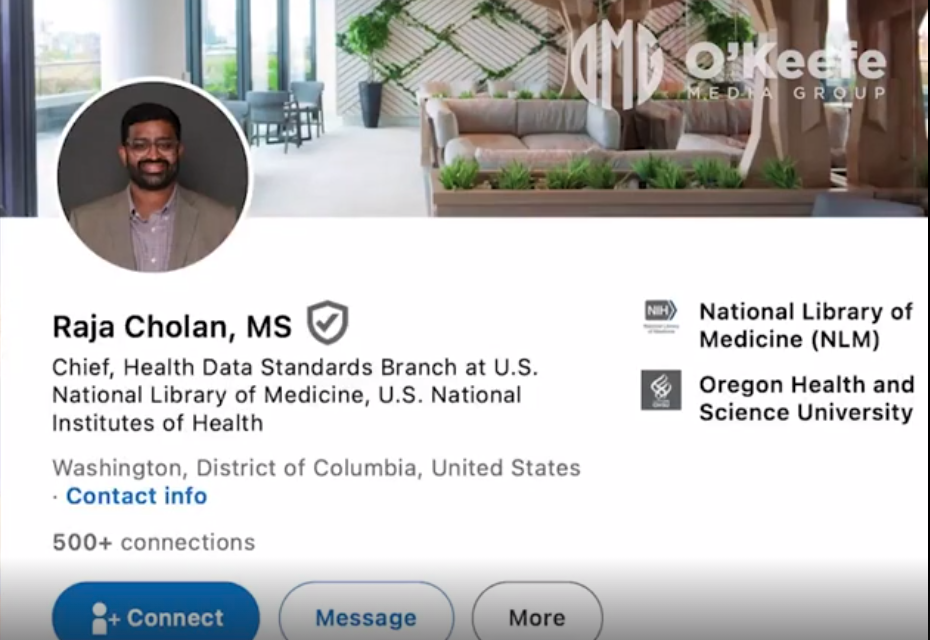

In July and August, Twitter and Meta announced that they had uncovered two overlapping sets of fraudulent accounts that were spreading inauthentic content on their platforms. The companies took the networks down but later shared portions of the data with academic researchers. On Wednesday, the Stanford Internet Observatory and social media analytics firm Graphika published a joint study on the data, revealing that the campaigns had all the markings of a U.S. influence network.

According to the report [PDF], the US was engaged in a “pro-US influence operation” targeting platform users in Russia, China, and Iran. Specific attribution is apparently impossible at this point, but evidence points to the use of US government-created sock puppet accounts (with the possible assistance of the UK government) to win hearts and minds (however illegitimately) in countries very much opposed to direct US intervention.

It was not a small operation. Although likely dwarfed by operations originating in China and Russia, the US government leveraged more than a hundred accounts to produce hundreds of thousands of posts reflecting the preferred narrative of the United States.

Twitter says that some 299,566 tweets were sent by 146 fake accounts between March 2012 and February 2022. Meanwhile, the Meta dataset shared with researchers included “39 Facebook profiles, 16 pages, two groups, and 26 Instagram accounts active from 2017 to July 2022,” the report says.

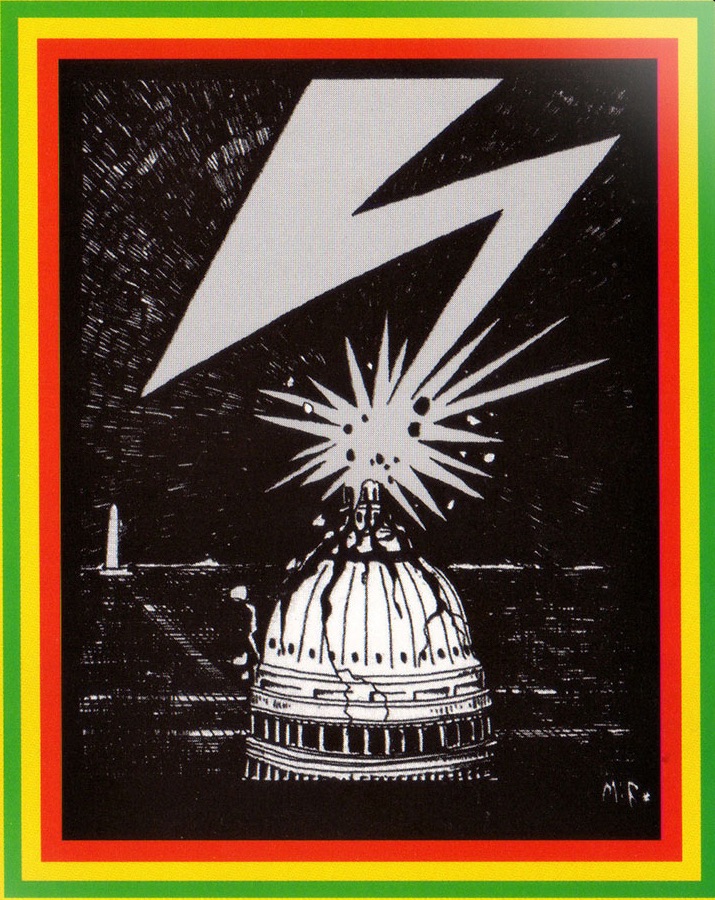

Being American doesn’t mean being better. As the authors of the report note, most of the campaign was low-effort: AI-generated profile photos, memes, and political cartoons were all in play. But, more notably, the accounts spread content from distinctly American government-related outlets like Voice of America and Radio Free Europe.

This is not to say the United States government is wrong to combat disinformation being spread by countries often considered to be enemies. But it should do so through official outlets, not faux accounts represented by AI-generated photos and unquestioning regurgitation of US government-generated content. Splashing around in the disinformation sewer doesn’t make any participant any better. It just ensures every entity that does so will get dirty.