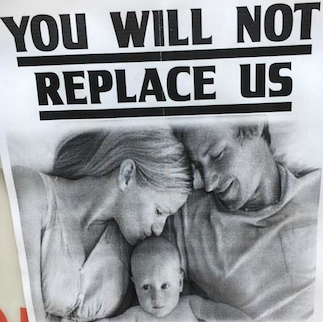

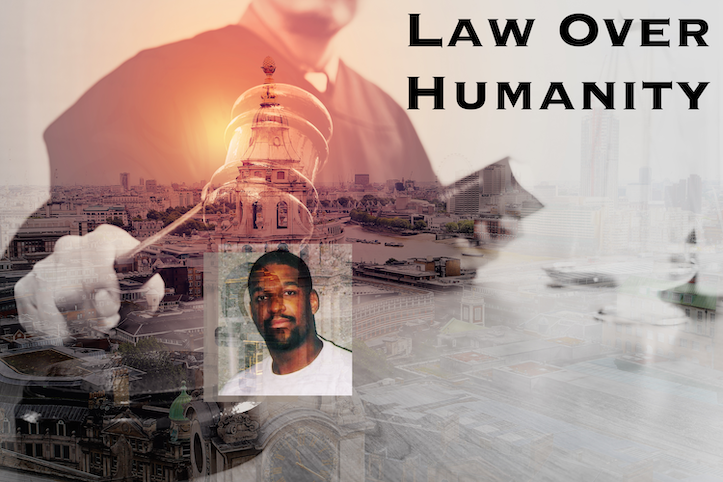

Facial Recognition Technology Cannot Tell Black People Apart (and maybe that's the point) and Police Use It Disproportionately to Arrest Black People, according to a Georgia State University Study

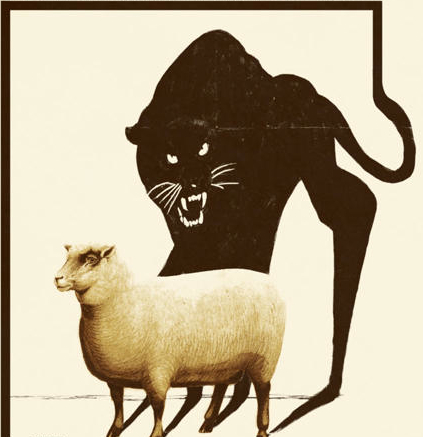

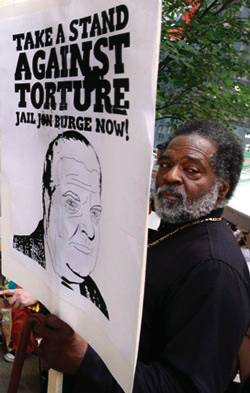

From [HERE] Imagine being handcuffed in front of your neighbors and family for stealing watches. After spending hours behind bars, you learn that the facial recognition software state police used on footage from the store identified you as the thief. But you didn’t steal anything; the software pointed cops to the wrong guy.

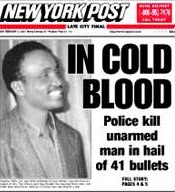

Unfortunately, this is not a hypothetical. This happened three years ago to Robert Williams, a Black father in suburban Detroit. Sadly, Williams’ story is not a one-off. In a recent case of mistaken identity, facial recognition technology led to the wrongful arrest of a Black Georgian for purse thefts in Louisiana.

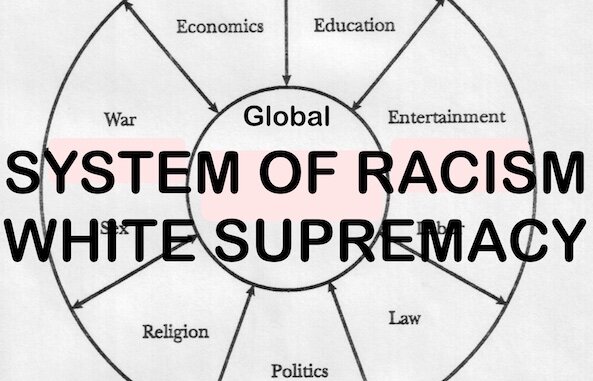

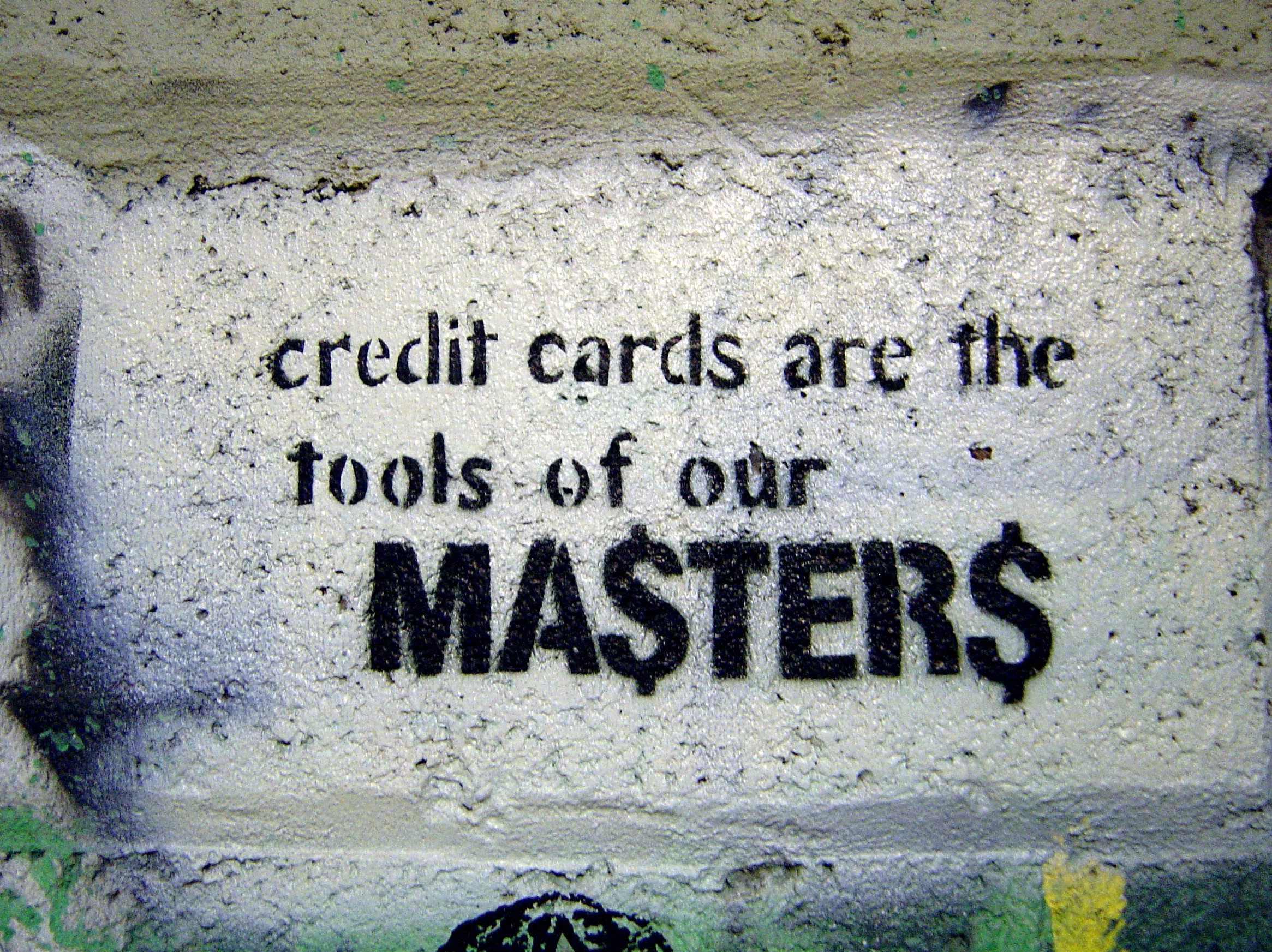

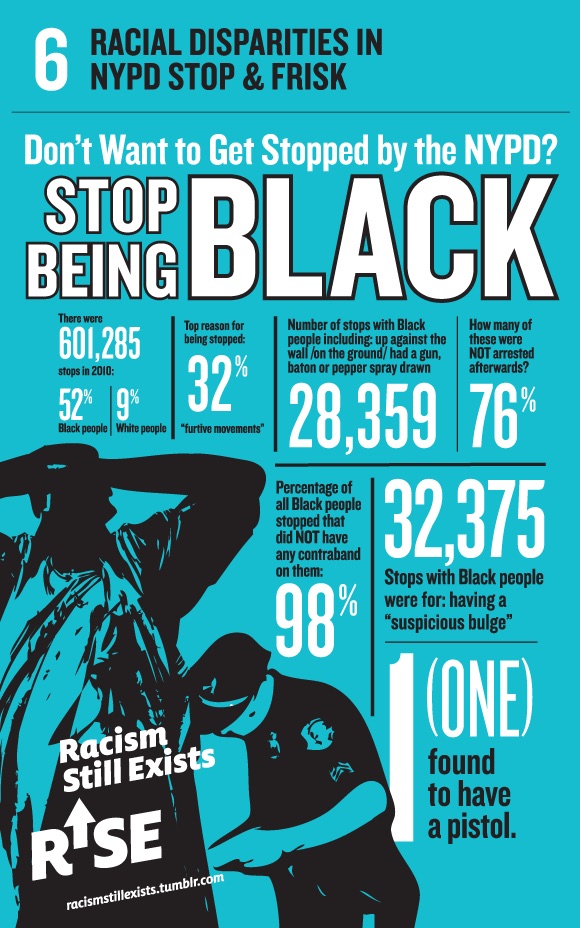

Our research supports fears that facial recognition technology (FRT) can worsen racial inequities in policing. We found that law enforcement agencies that use automated facial recognition disproportionately arrest Black people. We believe this results from several factors, including the lack of Black faces in the algorithms’ training data sets, a belief that these programs are infallible, and officers’ own biases, which tend to magnify these issues.

While no amount of improvement will eliminate the possibility of racial profiling, we understand the value of automating the time-consuming, manual face-matching process. We also recognize the technology’s potential to improve public safety. However, considering the potential harms of this technology, enforceable safeguards are needed to prevent unconstitutional overreaches.

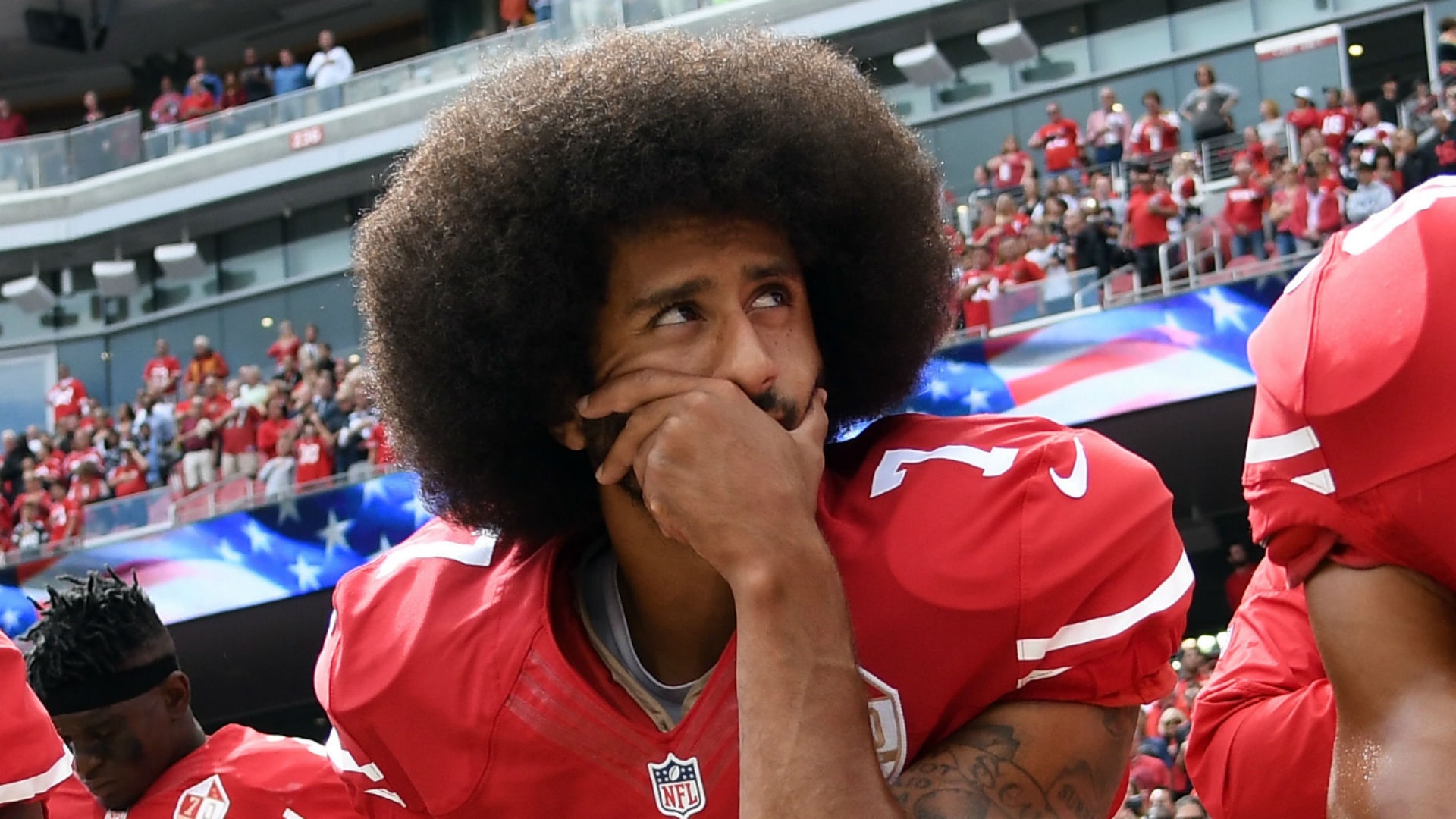

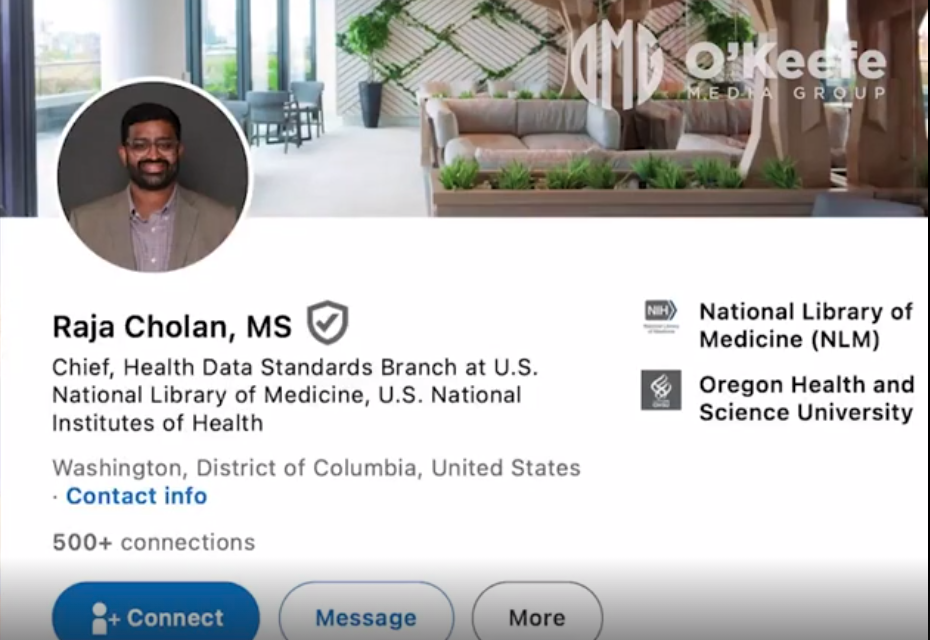

FRT is an artificial intelligence–powered technology that tries to confirm the identity of a person from an image. The algorithms used by law enforcement are typically developed by companies like Amazon, Clearview AI and Microsoft, which build their systems for different environments. Despite massive improvements in deep-learning techniques, federal testing shows that most facial recognition algorithms perform poorly at identifying people other than white men.

Civil rights advocates warn that the technology struggles to distinguish darker faces, which will likely lead to more racial profiling and more false arrests. Further, inaccurate identification increases the likelihood of missed arrests.

Still, some government leaders, including New Orleans Mayor LaToya Cantrell, tout this technology’s ability to help solve crimes. Amid the growing staffing shortages facing police nationwide, some champion FRT as a much-needed police coverage amplifier that helps agencies do more with fewer officers. Such sentiments likely explain why more than a quarter of local and state police forces and almost half of federal law enforcement agencies regularly access facial recognition systems, despite their faults.

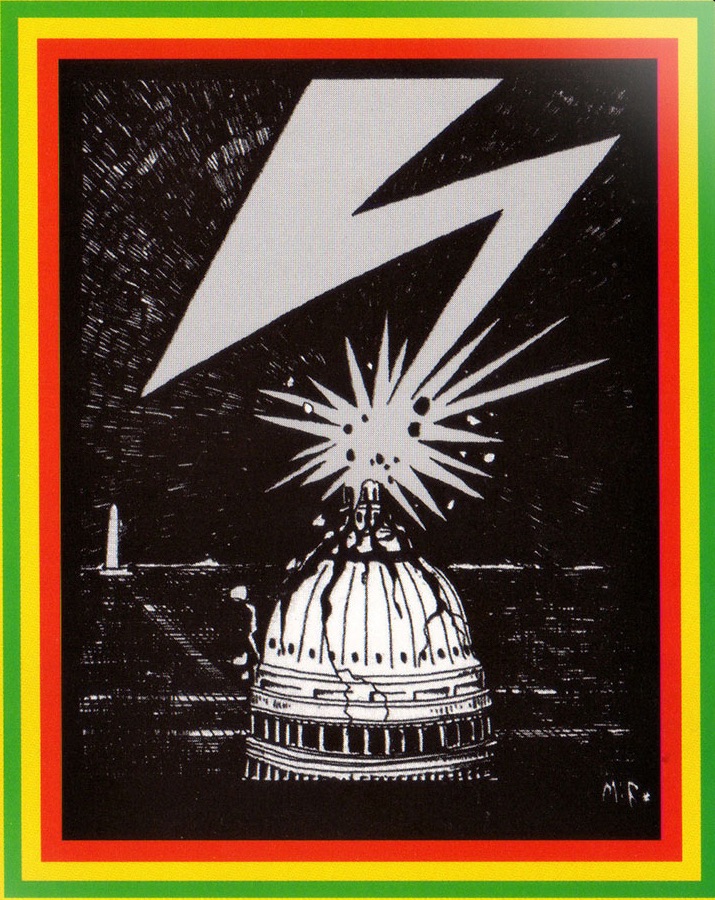

This widespread adoption poses a grave threat to our constitutional right against unlawful searches and seizures. [MORE]